Opinion

Echo Chambers in Social Media: Part 2 - Platform Dynamics and Real-World Impacts

How YouTube and Facebook shape echo chambers, fueling polarization and misinformation

By Timmy

In Part 1, we explored the definition of echo chambers and how they form through algorithms, confirmation bias, and self-selection. In Part 2, we examine how echo chambers manifest on specific social media platforms—YouTube and Facebook—through research and case studies. We also discuss their broader implications for political polarization and misinformation.

TL;DR

Echo chambers vary across platforms: YouTube creates mild echo chambers with a slight conservative bias, while Facebook amplifies divisive content. These dynamics fuel political polarization and spread misinformation, though algorithms can also increase exposure to diverse news.

Platform-Specific Insights

YouTube: Mild Echo Chambers, Subtle Bias

A 2022 study by NYU’s Center for Social Media and Politics, published via the Brookings Institution, investigated YouTube’s recommendation algorithm. Researchers found that the algorithm creates mild echo chambers, pushing liberals toward slightly more liberal content and conservatives toward slightly more conservative content. However, the effect is small, with substantial overlap in recommendations across ideologies. Notably, the algorithm tends to nudge all users slightly rightward, a conservative bias that had not previously been documented. Fears of YouTube leading users into extremist “rabbit holes” are largely unfounded (only 3% of users encountered such paths), but the platform’s scale means even small effects can reach millions of people.

Facebook: Amplifying Division

Research published in 2023 in Science and Nature highlighted Facebook’s role in creating ideological bubbles. The platform’s algorithm amplifies divisive content that generates strong emotional reactions, strengthening echo chambers, particularly for conservative users. About half of the posts users see come from like-minded sources, and conservatives engage more with political news, including content flagged as untrustworthy. This creates a feedback loop where engagement-driven algorithms promote polarizing content over neutral or diverse perspectives.

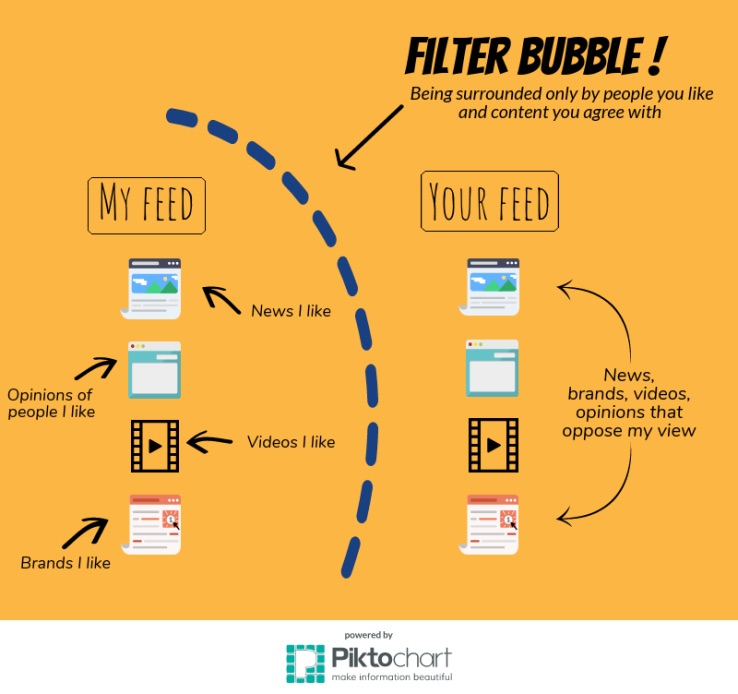

Image created by Evelyn Lo, via Piktochart, information sourced from Tobias Rose.

Filter Bubbles vs. Reality

Contrary to the “filter bubble” hypothesis, which suggests algorithms trap users in narrow information silos, research from the Reuters Institute indicates that social media can increase exposure to diverse news. Automated serendipity—encountering unexpected sources—and incidental exposure while using platforms for other purposes help diversify content, especially for younger users or those less interested in news. However, homophilic clusters (groups of like-minded users) still dominate, with Facebook showing higher ideological segregation than platforms like Reddit.

Real-World Implications

Political Polarization

Echo chambers reinforce existing beliefs, amplify extreme positions, and reduce opportunities for constructive dialogue. This deepens political divides, making opposing viewpoints seem rare or illegitimate and hindering compromise.

Misinformation Spread

By creating environments where false information goes unchallenged, echo chambers facilitate the spread of misinformation. Trust networks within these chambers increase acceptance of unverified claims, while limited exposure to fact-checks exacerbates the problem.

Looking Ahead

In Part 3, we’ll explore strategies to break out of echo chambers, from individual actions like diversifying media consumption to platform-level solutions like algorithm transparency. By understanding these dynamics, we can work toward a healthier information ecosystem.

This article is part of a three-part series on echo chambers in social media, compiled from recent research and expert analysis.