DeepSeek Unveils V3.1 Upgrade, Doubling Context Length for Enhanced AI Performance

By Timmy

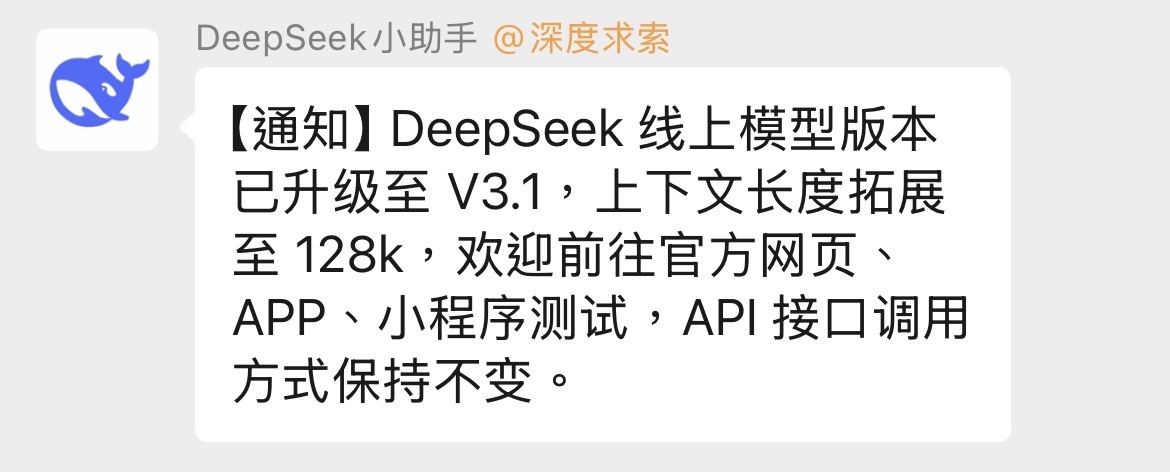

Chinese artificial intelligence company DeepSeek has quietly upgraded its online large language model to version 3.1, expanding the context length to 128,000 tokens and boosting capabilities in reasoning, programming, and tool usage. The update, announced on August 19, 2025, via the company's official WeChat group, marks a significant iteration just five months after the release of DeepSeek-V3-0324 in March.

Translation of the announcement:

[Notice] The DeepSeek online model has been upgraded to V3.1, and the context length has been expanded to 128k. You are welcome to test it on the official website, app, and mini-program. The API calling method remains unchanged.

The upgrade allows users to process longer inputs, such as entire code repositories or extensive documents, without losing coherence in multi-turn conversations. It lifts the previous cap on DeepSeek's hosted platform, where the context was limited to 64,000 tokens even though the open-source version already supported 128,000. The knowledge cutoff date remains July 2025, and the model does not include multimodal features like image or video processing. DeepSeek emphasized that the API remains unchanged and compatible with the OpenAI API format, so existing developer integrations should continue to work without modification.
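For developers, the practical question is whether a given input now fits in the larger window. The following is a minimal sketch in Python, not DeepSeek's own tooling: the four-characters-per-token ratio is a rough heuristic rather than the model's real tokenizer, the reply reserve is an arbitrary choice, and the model name `deepseek-chat` is a placeholder assumption that does not come from the announcement. The request body follows the OpenAI-style chat format the article mentions, and nothing is sent over the network.

```python
import json

CONTEXT_LIMIT = 128_000      # tokens, per the V3.1 announcement
OLD_CONTEXT_LIMIT = 64_000   # tokens, previous cap on the hosted platform
CHARS_PER_TOKEN = 4          # assumption: crude heuristic, not the real tokenizer


def estimate_tokens(text: str) -> int:
    """Rough token estimate using ceiling division on character count."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, limit: int = CONTEXT_LIMIT,
                    reserve_for_reply: int = 4_000) -> bool:
    """True if the estimated prompt plus a reserved reply budget fits the window."""
    return estimate_tokens(text) + reserve_for_reply <= limit


def build_chat_request(prompt: str, model: str = "deepseek-chat") -> dict:
    """Build an OpenAI-style chat request body (model name is an assumption)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    document = "x" * 400_000  # stands in for a large code repository dump
    print("estimated tokens:", estimate_tokens(document))
    print("fits in 64k window:", fits_in_context(document, OLD_CONTEXT_LIMIT))
    print("fits in 128k window:", fits_in_context(document))
    print(json.dumps(build_chat_request("Summarize this repository."), indent=2))
```

Under these assumptions, a 400,000-character input is estimated at 100,000 tokens, which would have exceeded the old 64,000-token cap but fits in the new window. A real integration would count tokens with the model's actual tokenizer before sending the request.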

According to the shared announcements, the V3.1 model features major improvements in reasoning performance, front-end development skills, and tool use. It also produces better-structured output, such as tables and lists, and shows improved understanding of physical phenomena for scientific applications. Users on X who tested the model reported stronger front-end abilities, with one stating, "Deepseek V3.1’s front-end ability may have improved," after it generated code without common issues like font size errors.

DeepSeek, founded in China, has gained recognition for its open-source Mixture-of-Experts (MoE) architecture, with the base V3 model boasting 671 billion total parameters and activating 37 billion per token. The company has committed to an open-source strategy, contributing to global AI advancements despite facing international sanctions that limit access to advanced computing resources. The initial V3 was introduced in December 2024, followed by the V3-0324 variant in March 2025, which focused on efficiency in large language models.
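To put the Mixture-of-Experts figures in perspective, the short calculation below works out what share of the model is active for each token. It uses only the two parameter counts quoted above. The function name is illustrative, and the result says nothing about actual compute cost or memory use, which depend on details not covered here.

```python
TOTAL_PARAMS = 671e9    # total parameters of the base V3 model, as reported
ACTIVE_PARAMS = 37e9    # parameters activated per token, as reported


def active_fraction(active: float, total: float) -> float:
    """Share of parameters used for a single token in an MoE model."""
    return active / total


if __name__ == "__main__":
    share = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
    print(f"active share per token: {share:.1%}")  # prints "active share per token: 5.5%"
```

In other words, only about one in eighteen parameters is involved in processing any given token, which is the efficiency argument usually made for MoE designs.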

Users can access V3.1 immediately through DeepSeek's official website, mobile app, and WeChat mini-program for testing. However, model weights have not yet been uploaded to platforms like Hugging Face, with some observers anticipating an imminent release. Community reactions on X and Reddit have been positive, with developers praising the rapid iteration and potential for applications in enterprise, academic, and personal settings.

This update reinforces DeepSeek's position in the competitive AI landscape, where longer context windows and specialized enhancements are key to handling complex tasks. As the company continues to iterate, industry watchers will be looking for the release of open-source weights and for further performance benchmarks.