DeepSeek Unveils Enhanced R1 Model with Reduced Hallucinations

Latest DeepSeek-R1-0528 Release Boosts Accuracy and Developer Features While Maintaining Open-Source Commitment

By Timmy

Chinese AI firm DeepSeek has released DeepSeek-R1-0528, an upgrade to its R1 reasoning model that shows improved capabilities in mathematical reasoning and problem-solving.

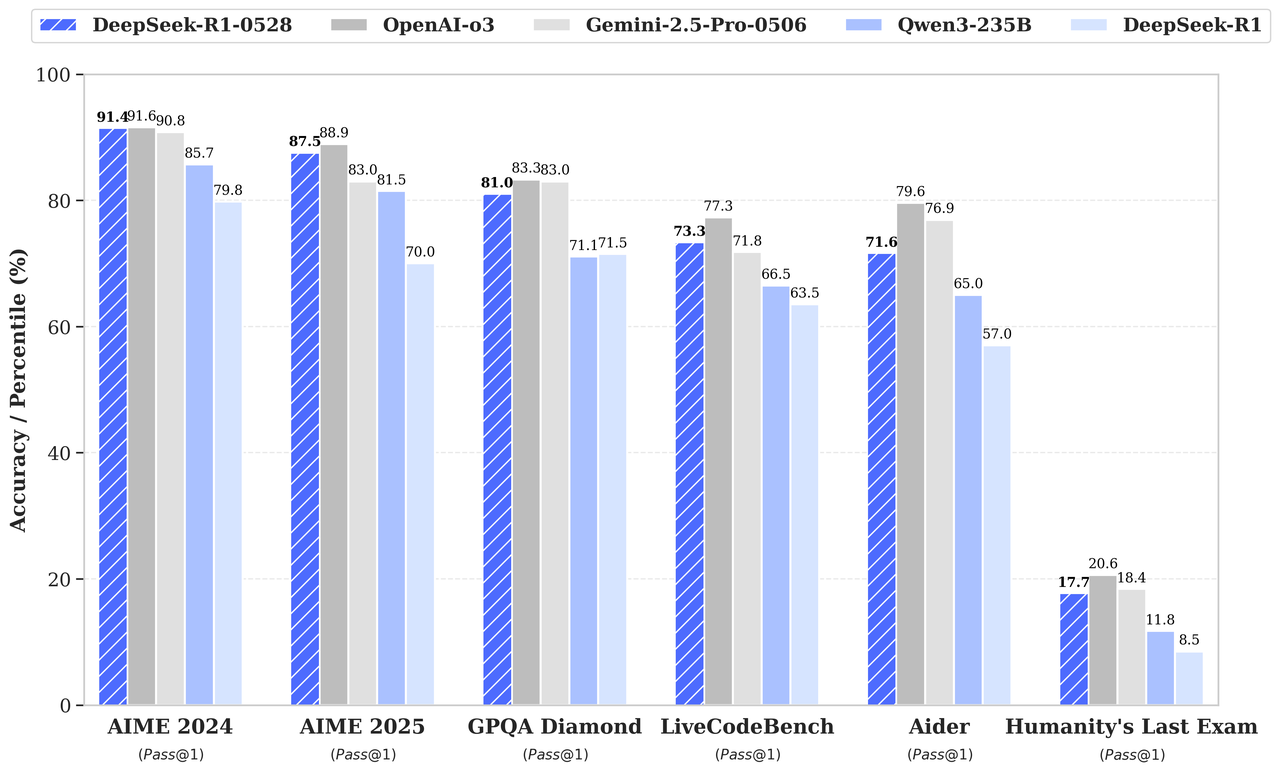

DeepSeek says the updated model's benchmark performance now approaches that of OpenAI's o3 and Google's Gemini-2.5-Pro. On the AIME 2025 test, its accuracy rose from 70% in the previous version to 87.5%.
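To make the AIME 2025 jump concrete, the short Python sketch below separates the absolute gain (in percentage points) from the relative gain. It uses only the two accuracy figures quoted above. The helper name `accuracy_gain` is hypothetical and exists only for illustration.

```python
def accuracy_gain(old_pct: float, new_pct: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = new_pct - old_pct           # difference between the two scores
    relative = absolute / old_pct * 100    # gain as a share of the old score
    return absolute, relative

# AIME 2025 accuracy reported for the previous R1 version and for R1-0528
absolute, relative = accuracy_gain(70.0, 87.5)
print(f"+{absolute:.1f} points, +{relative:.1f}% relative")
```

This prints `+17.5 points, +25.0% relative`. The reported jump is therefore 17.5 percentage points in absolute terms, or a 25% improvement relative to the old score.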

One of the most significant improvements in the new version is a reduction in hallucinations, instances where an AI model generates incorrect or fabricated information. According to DeepSeek, the updated model cuts hallucination rates by roughly 45 to 50% compared with the previous version in tasks such as rewriting, summarization, and reading comprehension.

The model also reasons at greater length. On the AIME 2025 test, it now uses an average of 23,000 tokens per question, nearly double the 12,000 tokens used by the earlier version, allowing for more thorough analysis during problem-solving.

DeepSeek has also released a smaller 8B-parameter model, DeepSeek-R1-0528-Qwen3-8B, which it says outperforms other models of its size by 10% on the AIME 2024 benchmark.

The company has released the 685B-parameter model as open source under the MIT License on ModelScope and Hugging Face. The context length remains 64K tokens on DeepSeek's own services, while the open-source version supports 128K tokens.

Users can access the updated model through DeepSeek's official website, mobile app, and mini-program by enabling the "Deep Thinking" feature.